Hadley

Hadley is a popular figure, and rightly so as he successfully introduced many newcomers to the wonders offered by R. His approach strikes some of us old greybeards as wrong---I particularly take exception to some of his writing which frequently portrays a particular approach as both the best and the only one. Real programming, I think, is often a little more nuanced and aware of tradeoffs which need to be balanced. As a book on another language once popularized: "There is more than one way to do things." But let us leave this discussion for another time.

As the reach of the Hadleyverse keeps spreading, we sometimes find ourselves at the receiving end of a cost/benefit tradeoff. That is what this post is about, and it uses a very concrete case I encountered yesterday.

As

blogged earlier, the

RcppZiggurat package was updated. I had not touched it in a year, but

Brian Ripley had sent a brief and detailed note concerning something flagged by the Solaris compiler (correctly suggesting I replace

fabs() with

abs() on integer types). (Allow me to stray from the main story line here for a second to stress just how insane a work load he is carrying, essentially for all of us. R and the R community are so just so indebted to him for all his work---which makes the usual social media banter about him so unfortunate. But that too shall be left for another time.) Upon making the simple fix, and submitting to

GitHub the usual

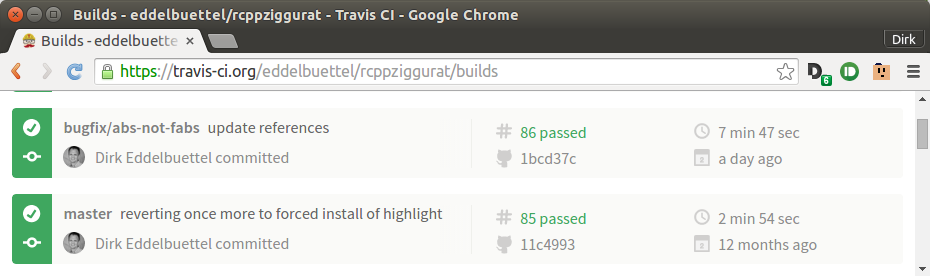

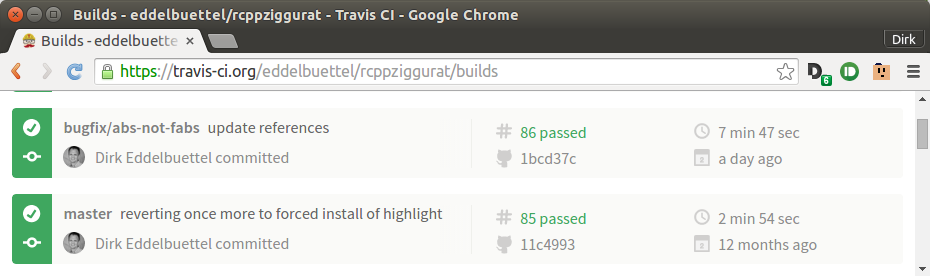

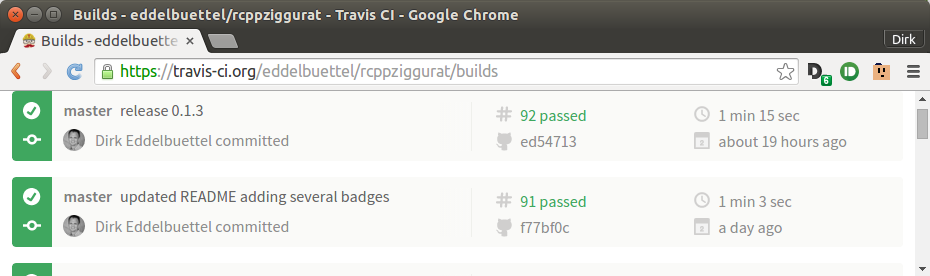

Travis CI was triggered. And here is what I saw:

All happy, all green. Previous build a year ago, most recent build yesterday, both passed. But hold on: test time went from 2:54 minutes to 7:47 minutes for an increase of almost five minutes! And I knew that I had not added any new dependencies, or altered any build options. What did happen was that among the dependencies of my package, one had decided to now also depend on ggplot2. Which leads to a chain of sixteen additional packages being loaded besides the four I depend upon---when it used to be just one. And that took five minutes as all those packages are installed from source, and some are big and take a long time to compile.

There is however an easy alternative, and for that we have to praise Michael Rutter who looks after a number of things for R on Ubuntu. Among these are the R builds for Ubuntu but also the rrutter PPA as well as the c2d4u PPA. If you have not heard this alphabet soup before, a PPA is a package repository for Ubuntu where anyone (who wants to sign up) can upload (properly set up) source files which are then turned into Ubuntu binaries. With full dependency resolution and all other goodies we have come to expect from the Debian / Ubuntu universe. And Michael uses this facility with great skill and calm to provide us all with Ubuntu binaries for R itself (rebuilding what yours truly uploads into Debian), as well as a number of key packages available via the CRAN mirrors. Less known however is this "c2d4u" which stands for CRAN to Debian for Ubuntu. And this builds on something Charles Blundell once built under my mentorship in a Google Summer of Code. And Michael does a tremendous job covering well over a thousand CRAN source packages---and providing binaries for all. Which we can use for Travis!

What all that means is that I could now replace the line

    - ./travis-tool.sh install_r RcppGSL rbenchmark microbenchmark highlight

which implies source builds of the four listed packages and all their dependencies with the following line implying binary installations of already built packages:

    - ./travis-tool.sh install_aptget libgsl0-dev r-cran-rcppgsl r-cran-rbenchmark r-cran-microbenchmark r-cran-highlight
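The renaming between those two lines follows the Debian convention visible above: the CRAN name is lower-cased and prefixed with r-cran-. A minimal shell sketch of that mapping follows; the helper name cran_to_deb is made up for illustration and is not part of r-travis.

```shell
#!/bin/sh
# cran_to_deb: hypothetical helper (not part of r-travis).
# Prints the r-cran-* binary package name for each CRAN package name given,
# assuming the usual Debian convention of "r-cran-" plus the lower-cased name.
cran_to_deb() {
    for pkg in "$@"; do
        printf 'r-cran-%s\n' "$(printf '%s' "$pkg" | tr '[:upper:]' '[:lower:]')"
    done
}

# The four packages from the install_r line above
cran_to_deb RcppGSL rbenchmark microbenchmark highlight
```

This only derives names. Whether a binary of that name actually exists depends on the PPA in use, and system libraries such as libgsl0-dev still have to be listed by hand.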

In this particular case I also needed to build a binary package of my RcppGSL package as this one is not (yet) handled by Michael. I happen to have (re-)discovered the beauty of PPAs for Travis earlier this year and revitalized an older and largely dormant launchpad account I had for this PPA of mine. How to build a simple .deb package will also have to be left for a future post to keep this more concise.

This can be used with the existing r-travis setup---but one needs to use the older, initial variant in order to have the ability to install .deb packages. So in the .travis.yml of RcppZiggurat I just use

    before_install:
      ## PPA for Rcpp and some other packages
      - sudo add-apt-repository -y ppa:edd/misc
      ## r-travis by Craig Citro et al
      - curl -OL http://raw.github.com/craigcitro/r-travis/master/scripts/travis-tool.sh
      - chmod 755 ./travis-tool.sh
      - ./travis-tool.sh bootstrap

to add my own PPA and all is good. If you do not have a PPA, or do not want to create your own packages you can still benefit from the PPAs by Michael and "mix and match" by installing from binary what is available, and from source what is not.
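The "mix and match" idea can be sketched as a small shell helper that splits a list of CRAN packages into an install_aptget line and an install_r line. This is only an illustration under stated assumptions: plan_install is a hypothetical name, and the list of available binaries is maintained by hand rather than queried from any repository.

```shell
#!/bin/sh
# plan_install: hypothetical helper, not part of r-travis.
# $1   : space-separated list of r-cran-* binaries assumed to be available
#        (hand-maintained here; nothing is looked up in a PPA)
# rest : CRAN package names that are needed
plan_install() {
    available=" $1 "
    shift
    bin=""
    src=""
    for pkg in "$@"; do
        deb="r-cran-$(printf '%s' "$pkg" | tr '[:upper:]' '[:lower:]')"
        case "$available" in
            *" $deb "*) bin="$bin $deb" ;;   # binary listed: install via apt-get
            *)          src="$src $pkg" ;;   # not listed: build from source
        esac
    done
    if [ -n "$bin" ]; then echo "./travis-tool.sh install_aptget$bin"; fi
    if [ -n "$src" ]; then echo "./travis-tool.sh install_r$src"; fi
}

# Pretend RcppGSL has no binary yet while the other three do
plan_install "r-cran-rbenchmark r-cran-microbenchmark r-cran-highlight" \
    RcppGSL rbenchmark microbenchmark highlight
```

The two printed lines can then be placed in .travis.yml, so that only the packages without a binary pay the cost of a source build.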

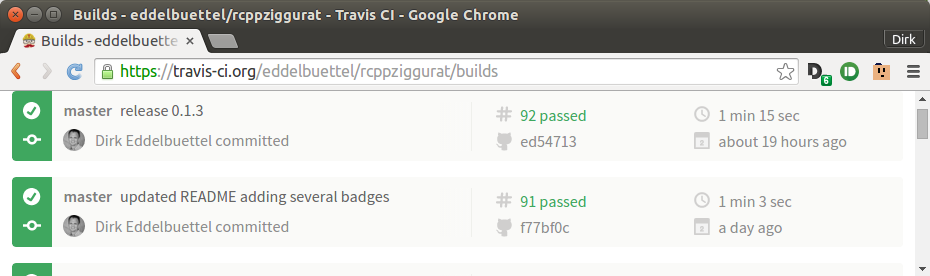

Here we were able to use an all-binary approach, so let's see the resulting performance:

Now we are at 1:03 to 1:15 minutes---much better.
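For the record, the arithmetic behind these timings can be checked with a few lines of shell. The helper to_seconds is a made-up name, and the only inputs are the build times quoted in this post.

```shell
#!/bin/sh
# to_seconds: hypothetical helper converting an m:ss string into seconds.
to_seconds() {
    min=${1%%:*}
    sec=${1##*:}
    sec=${sec#0}            # drop one leading zero so "08" is not read as octal
    echo $(( $min * 60 + ${sec:-0} ))
}

source_build=$(to_seconds 7:47)   # all dependencies built from source
old_build=$(to_seconds 2:54)      # the build from a year earlier
binary_slow=$(to_seconds 1:15)    # upper end of the binary-install range

echo "added by source builds: $(( source_build - old_build )) seconds"
echo "saved by binaries:      $(( source_build - binary_slow )) seconds"
```

That is 293 seconds (4:53, the "almost five minutes" above) added by the new dependency chain, and 392 seconds (about six and a half minutes) saved even by the slower of the binary runs.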

So to conclude, while the ever-expanding universe of R packages is fantastic for us as users, it can be seen to be placing a burden on us as developers when installing and testing. Fortunately, the packaging infrastructure built on top of Debian / Ubuntu packages can help and dramatically reduce build (and hence test) times. Learning about PPAs can be a helpful complement to learning about Travis and continuous integration. So maybe now I need a new reason to blame Hadley? Well, there is always snake case ...

Follow-up: The post got some pretty immediate feedback shortly after I posted it. Craig Citro pointed out (quite correctly) that I could use r_binary_install which would also install the Ubuntu binaries based on their R package names. Having built R/CRAN packages for Debian for so long, I am simply more used to the r-cran-* notation, and I think I was also the one contributing install_aptget to r-travis ... Yihui Xie spoke up for the "new" Travis approach deploying containers, caching of packages and explicit whitelists. It was in that very (GH-based) discussion that I started to really lose faith in the new Travis approach as they want us to whitelist each and every package. With 6900 and counting at CRAN I fear this simply does not scale. But different approaches are certainly welcome. I posted my 1:03 to 1:15 minutes result. If the "New School" can do it faster, I'd be all ears.

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. Please report excessive re-aggregation in third-party for-profit settings.
